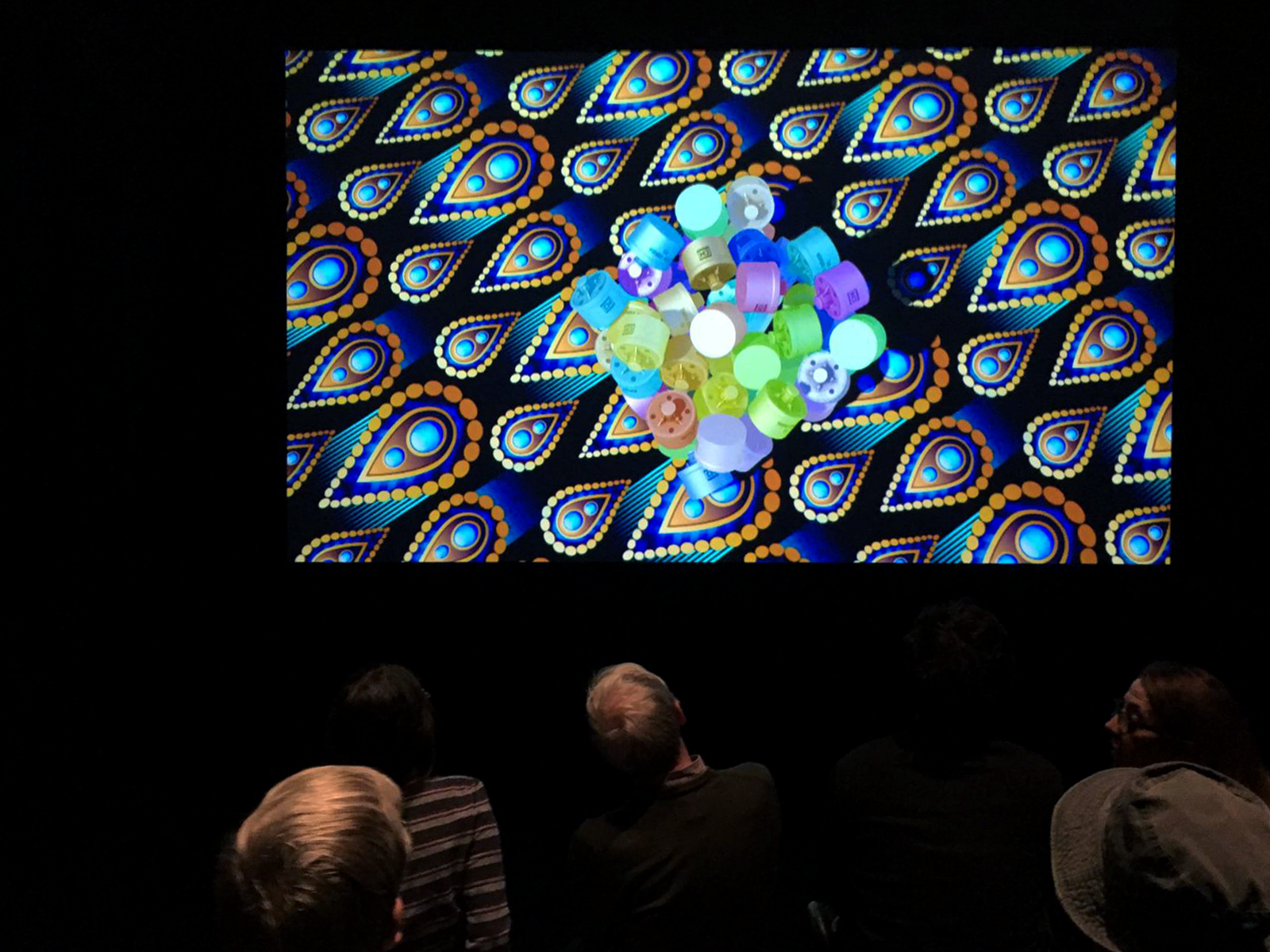

For our contribution to the 2019 Whitney Biennial at New York’s Whitney Museum of American Art, we developed a machine learning and computer vision workflow to identify tear gas grenades in digital images. We focused on one specific product: the Triple-Chaser CS grenade from the catalogue of Defense Technology, a leading manufacturer of ‘less-lethal’ munitions.

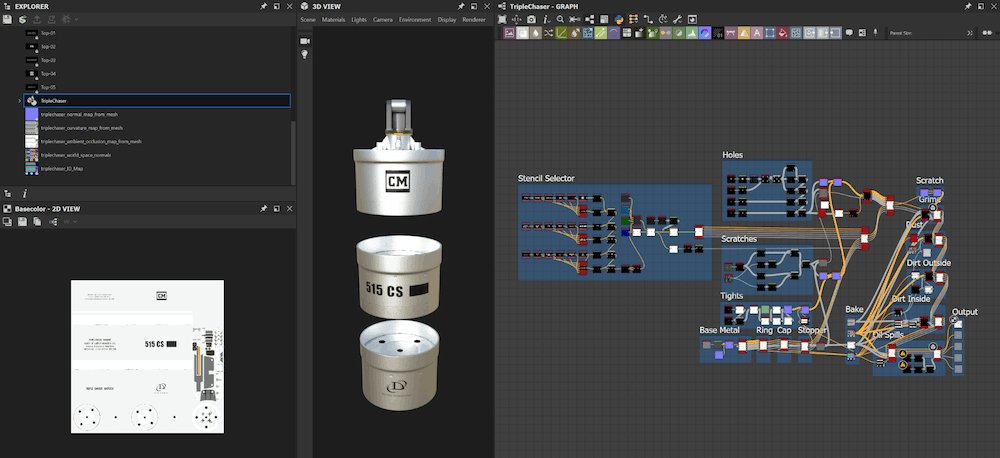

Building on previous research, we used ‘synthetic’ images generated with 3D software to train machine learning classifiers. This led us to construct a pragmatic end-to-end workflow that we hope will also be useful for other open source human rights monitoring, and for research more generally.
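
One practical reason synthetic images suit classifier training is that the 3D scene already records where the object is, so every render can be labelled automatically. The sketch below illustrates this idea under simplifying assumptions of our own, not the project's actual pipeline. It assumes an ideal pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), assumes the model's vertices are already expressed in camera coordinates, and ignores occlusion. Under those assumptions it projects the vertices into the image and takes their extent as a 2D bounding box.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Point3 = Tuple[float, float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class PinholeCamera:
    """Hypothetical ideal pinhole camera; intrinsics are in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point, x
    cy: float  # principal point, y

    def project(self, p: Point3) -> Tuple[float, float]:
        """Project a point given in camera coordinates (z points forward)."""
        x, y, z = p
        if z <= 0:
            raise ValueError("point lies at or behind the camera plane")
        return (self.fx * x / z + self.cx, self.fy * y / z + self.cy)


def bounding_box(cam: PinholeCamera, vertices: Iterable[Point3],
                 width: int, height: int) -> Box:
    """Auto-label a render: the 2D box enclosing all projected vertices,
    clamped to the image. Occlusion by other objects is not considered."""
    us, vs = zip(*(cam.project(v) for v in vertices))
    x_min = max(0.0, min(us))
    y_min = max(0.0, min(vs))
    x_max = min(float(width), max(us))
    y_max = min(float(height), max(vs))
    return (x_min, y_min, x_max, y_max)
```

For example, a 10 cm cube centred 1 m in front of a 640×480 camera with `fx = fy = 500` yields a box of roughly 53 pixels square around the image centre. In a real pipeline the vertices would come from the 3D scene and be transformed into camera space first.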

Unreal Engine

Substance Designer

Autodesk Maya